Robots.txt is a web standard that gives guidance to web robots, usually search engine[2] crawlers, on how to navigate a website[4]. Proposed by Martijn Koster in 1994, it works as a communication tool that asks robots to avoid specific files or sections of a site. The file is placed at the root of a website and matters for search engine optimization[1] (SEO) because it helps control which parts of the site crawlers visit, and therefore which parts are likely to be indexed. There is no legal or technical enforcement mechanism, so the file only affects robots that choose to comply and should not be treated as a security measure. The standard has evolved over time, with updates reflecting changing webmaster[3] needs, and understanding its details helps with effective SEO.

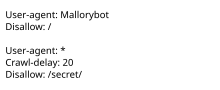

robots.txt is the filename used for implementing the Robots Exclusion Protocol, a standard used by websites to indicate to visiting web crawlers and other web robots which portions of the website they are allowed to visit.
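As a rough illustration of how a compliant crawler might consult these rules, the sketch below uses Python's standard `urllib.robotparser` module. The file contents, the `example.com` URLs, and the agent names `ExampleCrawler` and `ExampleAIBot` are hypothetical and chosen only for the example; real sites and bots will differ.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, invented for illustration.
# One group blocks a single named bot entirely; the wildcard group
# asks every other robot to stay out of /private/.
EXAMPLE_ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = build_parser(EXAMPLE_ROBOTS_TXT)

# A well-behaved crawler checks each URL before requesting it.
print(rules.can_fetch("ExampleCrawler", "https://example.com/index.html"))
print(rules.can_fetch("ExampleCrawler", "https://example.com/private/report.html"))
print(rules.can_fetch("ExampleAIBot", "https://example.com/index.html"))
```

Under these assumed rules, the first check is allowed and the other two are refused. Nothing forces a robot to run such a check; the result only matters to crawlers that choose to honor it.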

The standard, developed in 1994, relies on voluntary compliance. Malicious bots can use the file as a directory of which pages to visit, though standards bodies discourage countering this with security through obscurity. Some archival sites ignore robots.txt. The standard was used in the 1990s to mitigate server overload; in the 2020s many websites began denying bots that collect information for generative artificial intelligence.

The robots.txt file can be used in conjunction with sitemaps, a robot inclusion standard for websites.
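One common way the two are combined is a `Sitemap:` line inside robots.txt that points crawlers at the sitemap. The sketch below, again using Python's standard `urllib.robotparser` (its `site_maps()` method requires Python 3.8 or later), reads such a line; the file contents and the `example.com` sitemap URL are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that both excludes a path and advertises a sitemap.
ROBOTS_WITH_SITEMAP = """\
User-agent: *
Disallow: /private/

Sitemap: https://example.com/sitemap.xml
"""

sitemap_rules = RobotFileParser()
sitemap_rules.parse(ROBOTS_WITH_SITEMAP.splitlines())

# site_maps() returns the listed sitemap URLs, or None if the file has none.
sitemap_urls = sitemap_rules.site_maps()
print(sitemap_urls)
```

A crawler could then fetch each listed sitemap to discover pages the site wants visited, while still applying the exclusion rules to every URL it finds there.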